AI & Decision Governance

AI doesn't introduce new intent. It accelerates existing decision logic.

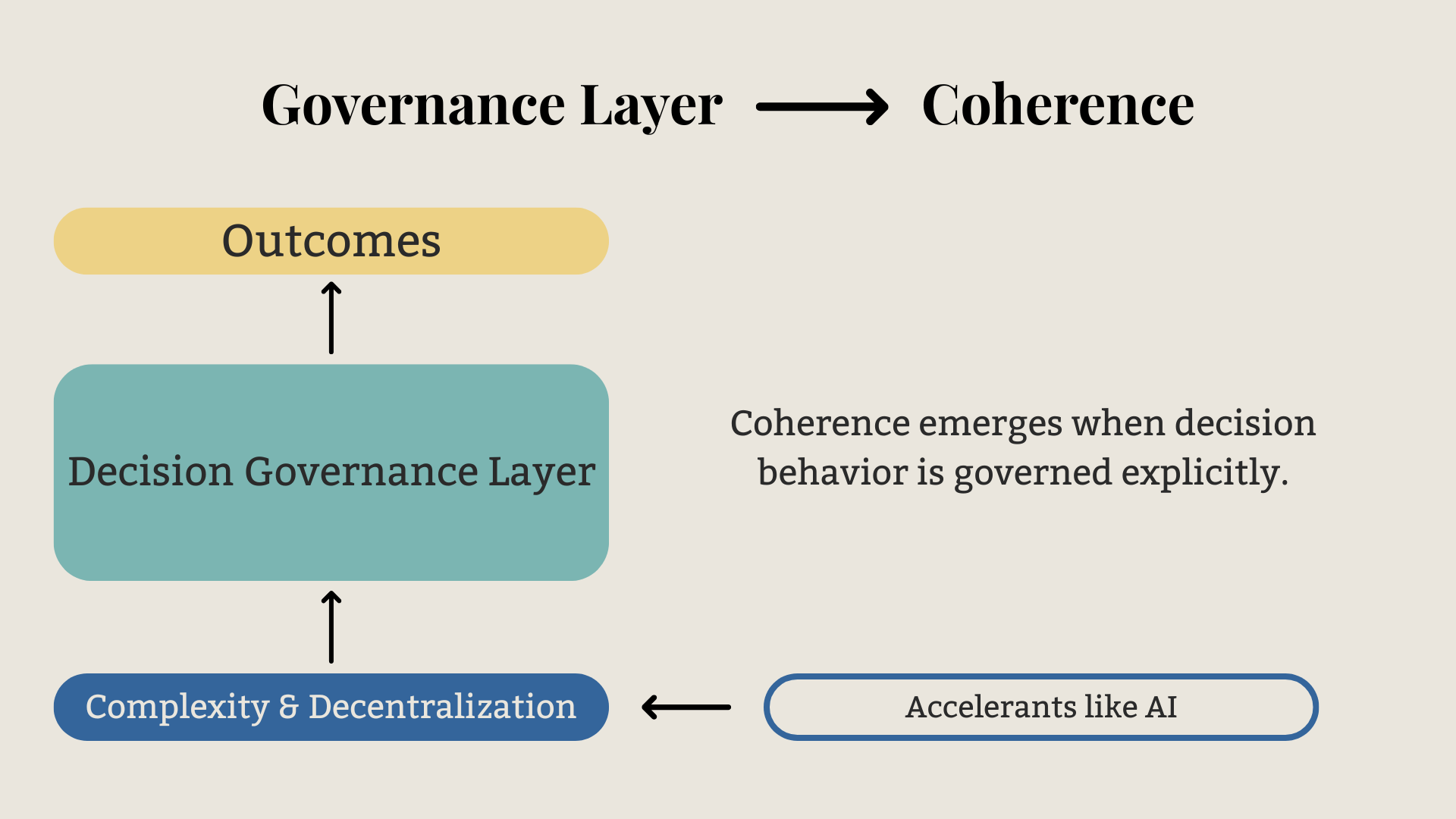

As AI becomes embedded in workflows, decisions execute continuously. Escalation windows shrink. The cost of correction rises. Small misalignments compound at machine speed. And because AI inherits judgment from the humans and systems that built it, dysfunction doesn't disappear; it scales.

Most AI governance operates too late.

Traditional controls focus on outputs: compliance, explainability, monitoring. But the primary risk sits upstream, in what decisions AI is allowed to make, whose judgment it inherits, and which signals it amplifies.

By the time you're monitoring outputs, misalignment is already executing at scale.

We govern AI before it accelerates drift.

Stealth Dog measures decision capacity upstream, before automation scales it. We identify which decision patterns are high-signal, where dysfunction distorts judgment, and whose authority should govern automated systems. This makes decision behavior visible early, so you can define which decisions AI is permitted to make, whose judgment it inherits, and where human oversight must remain.

When decision signal is governed, AI stabilizes performance. When it's not, AI accelerates fragility.

Ready to Learn More?

Learn how we measure decision signal → What We Measure

See why traditional analytics miss this → Signal vs. Noise

Let’s talk → Contact